Disinformation Is a Weapon. We Need a Manual.

An examination of DISARM, the open-source framework for describing and countering disinformation campaigns

It is no secret that authoritarian regimes and opportunistic actors have weaponized the information sphere. From false narratives about vaccine safety to Russian influence operations targeting European unity, manipulation campaigns have sown confusion and distrust. Yet the tools we use to map these campaigns remain ad hoc. In an era when information itself is a battlefield, the Disinformation Analysis and Risk Management framework, or DISARM, offers a structured approach for naming and fighting these threats.

An Origin in Cybersecurity

DISARM did not spring from nowhere. In 2018, a small group of researchers and technologists met at a hybrid warfare workshop and sketched out what they felt was missing in the fight against disinformation. One year later, with backing from philanthropist Craig Newmark, they produced an initial framework called AMITT — for Adversarial Misinformation and Influence Tactics & Techniques. AMITT immediately found takers. Analysts at the United Nations, the World Health Organization and the North Atlantic Treaty Organization began adopting it.

Shortly afterward, engineers at MITRE Corporation, the nonprofit known for mapping cyber‑attacks in its ATT&CK matrix, started a related project named SP!CE. The two frameworks were eventually merged, and by late 2021 an independent DISARM Foundation was formed. The goal was to ensure that this new taxonomy would remain open‑source and community‑driven.

What DISARM Does

At its core, DISARM is not an algorithm but a lexicon. It organizes disinformation campaigns into phases and catalogues the tactics and techniques used by threat actors. Analysts using DISARM can classify an operation’s objectives, the actions taken to achieve them and the ways those actions are strung together. This is modeled on MITRE’s ATT&CK, which gave cybersecurity professionals a way to talk about hackers’ playbooks.

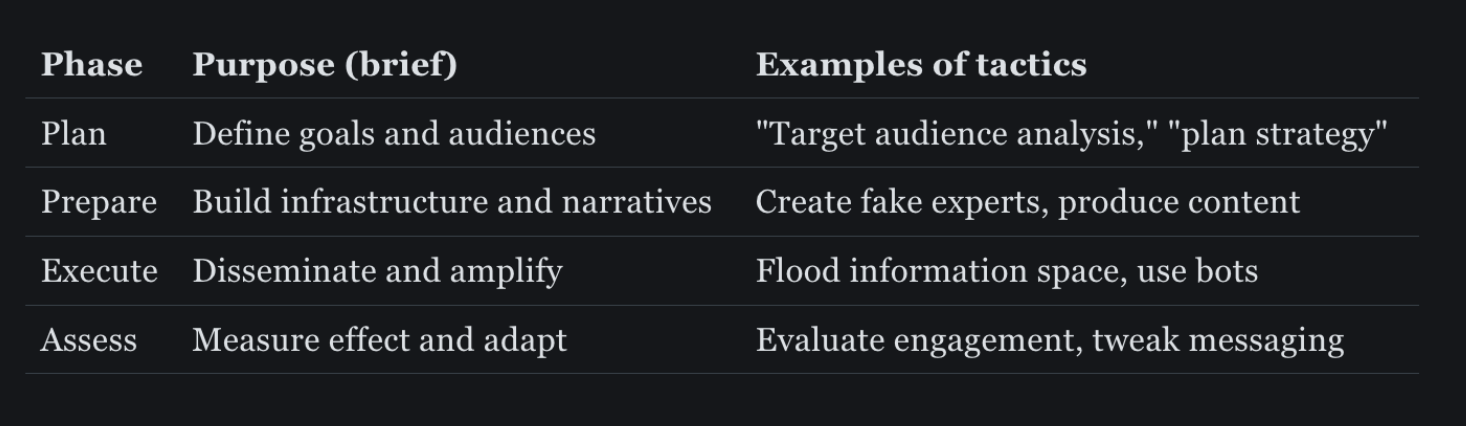

The DISARM framework divides a disinformation operation into four broad phases — Plan, Prepare, Execute and Assess. In the Plan phase, organizers decide on objectives and target audiences. During Prepare, they build assets: fake personas, inauthentic pages and content factories. Execute covers the dissemination of messages across social media, chat apps or traditional media. Finally, in Assess, they measure impact and refine their playbook.

DISARM comprises two linked frameworks: Red, which catalogues malicious behaviour, and Blue, which suggests counter‑measures. The Red framework lists hundreds of techniques grouped under a smaller set of tactics, with entries ranging from "Develop Narratives" to "Maximize Exposure" and "Create Inauthentic Pages." The Blue framework, in contrast, provides a menu of possible responses, including fact‑checking, community engagement, content takedowns and legal sanctions. Analysts map an incident to the Red list and then choose appropriate options from the Blue list.
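To make the Red-to-Blue workflow concrete, here is a minimal Python sketch of how an analyst's tooling might tag an incident and look up candidate responses. It is an illustration only. The entry names and response labels are the ones quoted in this article, but the phase assignments, the Red-to-Blue links and the `Incident` class are assumptions invented for the example. They are not the official DISARM data or any DISARM API.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    PLAN = "Plan"
    PREPARE = "Prepare"
    EXECUTE = "Execute"
    ASSESS = "Assess"


# ASSUMPTION: phase assignments are illustrative, not the official mapping.
RED = {
    "Develop Narratives": Phase.PLAN,
    "Create Inauthentic Pages": Phase.PREPARE,
    "Maximize Exposure": Phase.EXECUTE,
}

# ASSUMPTION: these Red -> Blue links are invented for the example.
BLUE = {
    "Develop Narratives": ["fact-checking", "community engagement"],
    "Create Inauthentic Pages": ["content takedowns"],
    "Maximize Exposure": ["content takedowns", "legal sanctions"],
}


@dataclass
class Incident:
    name: str
    red_tags: list[str] = field(default_factory=list)

    def tag(self, entry: str) -> None:
        """Attach a Red entry to the incident, rejecting unknown labels."""
        if entry not in RED:
            raise KeyError(f"unknown Red entry: {entry}")
        if entry not in self.red_tags:
            self.red_tags.append(entry)

    def by_phase(self) -> dict[Phase, list[str]]:
        """Group the incident's tags by campaign phase."""
        grouped = {phase: [] for phase in Phase}
        for entry in self.red_tags:
            grouped[RED[entry]].append(entry)
        return grouped

    def candidate_responses(self) -> list[str]:
        """Collect Blue options for every tag, without duplicates."""
        responses = []
        for entry in self.red_tags:
            for option in BLUE.get(entry, []):
                if option not in responses:
                    responses.append(option)
        return responses


if __name__ == "__main__":
    incident = Incident("example-campaign")  # hypothetical incident
    incident.tag("Create Inauthentic Pages")
    incident.tag("Maximize Exposure")
    print(incident.by_phase())
    print(incident.candidate_responses())
```

Rejecting unknown labels in `tag` is the design choice that matters here: a shared vocabulary is only useful if free-text variants cannot slip into the record.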

Why It Matters

DISARM’s advocates say the framework brings order to a chaotic field. By tagging incidents consistently, organisations can share intelligence and compare cases. Debunk.org uses DISARM to support its monitoring operations and highlights three benefits: documenting incidents, fostering information sharing and providing a foundation for training. The European Digital Media Observatory notes that DISARM draws from MITRE’s ATT&CK and is intended to give analysts, decision‑makers and communicators a shared understanding of disinformation. Alliance4Europe runs courses that teach practitioners how to tag reports and develop counter‑strategies using the framework.
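The claim that consistent tagging lets organisations compare cases can be illustrated with a short sketch. Once two reports use the same labels, their overlap can be scored with a simple set measure such as Jaccard similarity. The incident names and tag sets below are hypothetical, and Jaccard similarity is one plausible choice for the example, not a method prescribed by DISARM or used by the organisations named above.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Share of tags two incidents have in common, from 0.0 to 1.0."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


# ASSUMPTION: hypothetical reports tagged with labels quoted in the article.
REPORTS = {
    "incident-A": {"Develop Narratives", "Create Inauthentic Pages"},
    "incident-B": {
        "Develop Narratives",
        "Create Inauthentic Pages",
        "Maximize Exposure",
    },
    "incident-C": {"Maximize Exposure"},
}


def most_similar(target: str, reports: dict[str, set[str]]) -> list[tuple[float, str]]:
    """Rank every other report by tag overlap with the target, best first."""
    scores = [
        (jaccard(reports[target], tags), name)
        for name, tags in reports.items()
        if name != target
    ]
    return sorted(scores, reverse=True)


if __name__ == "__main__":
    for score, name in most_similar("incident-A", REPORTS):
        print(f"{name}: {score:.2f}")
```

A comparison like this only works because both sides draw from the same label set. With free-text descriptions, the same behaviour would appear under different names and the overlap would be understated.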

For defence‑tech startups, the implications are clear. Systems designed for contested environments — whether drones, secure communications or autonomous platforms — must account for psychological operations. Integrating DISARM’s taxonomy into threat models helps engineers anticipate how adversaries might exploit online narratives to disrupt operations. It also positions firms to meet emerging regulatory requirements around FIMI (Foreign Information Manipulation and Interference), as governments adopt DISARM for formal data exchange.

A Framework With Blind Spots

Despite its promise, DISARM is not a panacea. Researchers at the Data Knowledge Hub caution that categorising techniques becomes harder as they evolve, and that the framework cannot yet capture offline manipulation or activity in closed communication channels. A 2024 analysis by the EU DisinfoLab praised the Blue framework but stopped short of endorsing all its counter‑measures, arguing that some recommended responses are ineffective or incomplete. That study grouped responses into five categories (exposure, community engagement, distribution control, infrastructure disruption and legal sanctions) and developed its own metrics to evaluate cost‑effectiveness and unintended consequences. In other words, DISARM offers a starting point, not a complete response plan.

Aligning With a Multipolar Reality

The global information space today is contested by authoritarian powers, non‑state actors and domestic extremists alike. A multipolar world demands a multipolar approach to defence. DISARM, while imperfect, provides a common language that organisations on both sides of the Atlantic are starting to speak. If Western democracies and their defence contractors hope to remain resilient, they must take cognitive security as seriously as missile defence. That means investing in frameworks like DISARM, training analysts who understand them and building products that integrate them by design.

No framework can eliminate disinformation, but failing to use one all but guarantees you will be blindsided. DISARM is a tool; like any tool, its value depends on those who wield it. The choice for defence‑tech innovators is whether to treat the information domain as a battlefield — or to cede it to those who already do.